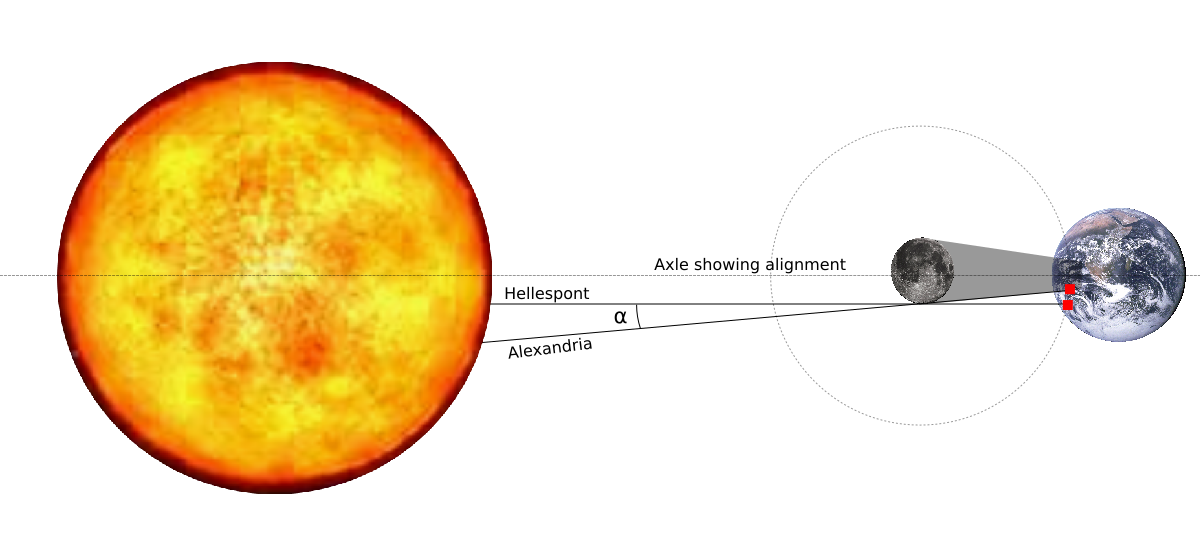

The image above shows the trigonometry used by Hipparchus in 190 BCE to calculate the Earth-Moon distance. The computation used time, two wells in the Hellespont and Alexandria, an eclipse, and a little bit of math. Note that such a natural setting has never occurred again; the positions of later eclipses would all have been off.

Measurements in astronomy began even before the word astronomy was coined. Early astronomical work by ancients mainly included creating a calendar so that we could have a concept of time. The earliest records of measurements were from the Babylonian Empire, where they recorded the position of the planets on clay tablets. They used this information to determine seasons and the best times to plant and to harvest.

The first known attempt to measure an astronomical distance was by Hipparchus during the solar eclipse on March 14th, 190 BCE (shown above). He had the angle of inclination of the Moon as seen from the Hellespont and from Alexandria. He used this information to calculate the Moon’s distance from the Earth and found that it was between 62 and 73 Earth radii. Considering how limited the tools of measurement were at the time, this was a noteworthy attempt. The accepted average value today is about 60 Earth radii (the distance varies as the orbit is not a perfect circle).

The Greeks were also able to measure the size of the Earth without modern instruments such as the telescope. They observed angles and distances between two towns and found that the circumference of our planet was about 46,700 km (29,000 miles). Today, we know the circumference of the Earth to be about 40,075 km (24,900 miles), which isn’t far from what the Greeks estimated.
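The scaling behind this kind of estimate can be sketched in a few lines of Python. The figures used below (an arc of 7.2 degrees spanning 5,000 stadia, and a stadion of 185 m) are the values traditionally attributed to Eratosthenes; they are assumptions here, not numbers taken from this article, and the function name is only illustrative.

```python
def circumference_from_arc(arc_length, arc_angle_deg):
    """Scale a measured arc up to the full 360-degree circle."""
    return arc_length * 360.0 / arc_angle_deg


# Assumed inputs: 5,000 stadia between the two towns, 7.2 degrees of arc.
circumference_stadia = circumference_from_arc(5000, 7.2)

# Assumed stadion length in metres; the true ancient value is uncertain.
STADION_M = 185.0
circumference_km = circumference_stadia * STADION_M / 1000

print(f"{circumference_stadia:.0f} stadia, about {circumference_km:.0f} km")
```

With a different assumed stadion length the result shifts by several thousand kilometers, which is one reason ancient estimates are hard to compare with the modern value.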

Modern Day Astronomy Measurements

Willebrord Snell was a Dutch scientist who accurately calculated the circumference of the Earth in the year 1617. This discovery made it possible for people to measure distances between several places on the continent. It also gave us baselines that we could now use to calculate the distance between our Earth and other planets within our solar system.

The French Academy sent scientists to French Guiana while other scientists remained in Paris. Using telescopes, they measured the distance between Mars and Earth. They also used Kepler’s laws to work out the distances to the other known planets in the solar system and to find the radius of our planet.

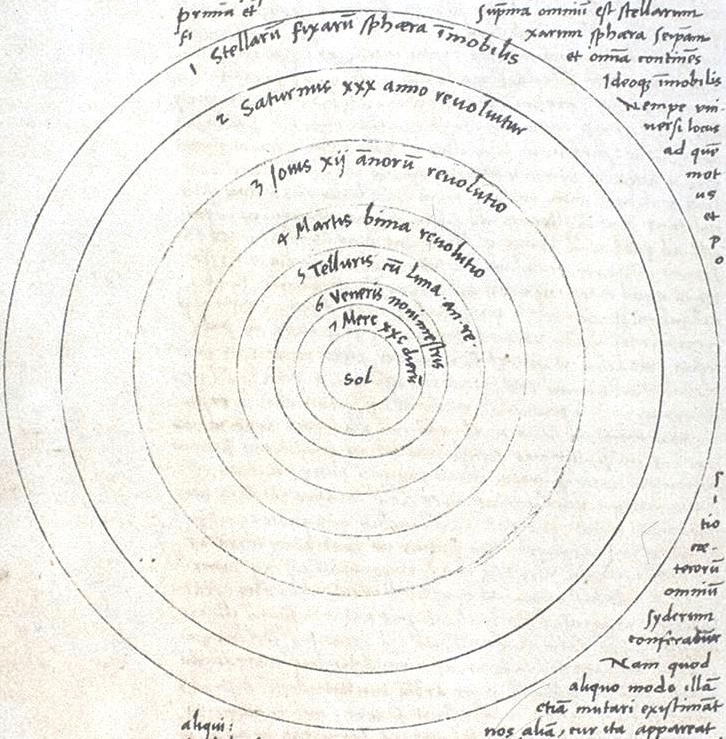

The History of Kepler’s Laws

Nicolaus Copernicus proposed that the Earth was indeed a sphere, but that it rotated from west to east, and that this movement is what gave the impression that the Sun, Moon, and stars rose in the east. In his model, the stars did not move, and the Sun was at the center of the universe (at the time, the universe was limited to our solar system since stars were not yet recognized as other Sun-like objects), with all the planets revolving around it. This theory was heavily criticized at the time.

Tycho Brahe was one of the scientists who set out to disprove this theory. He conducted sidereal measurements, which are measurements of the positions of the planets relative to the background stars. On examining the data gathered, one of Tycho’s students changed his mind and became an ardent supporter of Copernicus. This student was Johannes Kepler. He used the measurements to determine that Mars follows an elliptical orbit around the Sun. He found that the time it took the Earth, Mars, and the Sun to move from being in a straight line to forming a right-angled triangle was 105.5 days. In this period, the Earth would have moved by 104 degrees, while Mars would have moved 55 degrees. Therefore, the right-angled triangle contained an angle of 49 degrees (104 − 55) at the Sun. Since the distance between the Earth and the Sun was unknown, he defined it as 1 Astronomical Unit.
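As a rough illustration of how the triangle gives a distance, here is a minimal Python sketch. It assumes the right angle sits at the Earth (Mars seen 90 degrees away from the Sun) and treats both orbits as circles; neither assumption is stated in the text above, and the function name is hypothetical.

```python
import math


def mars_distance_au(earth_deg, mars_deg, earth_sun_au=1.0):
    """Sun-Mars distance from a right-angled Sun-Earth-Mars triangle.

    Assumes the right angle is at the Earth, so the Sun-Earth side is
    adjacent to the angle at the Sun and Sun-Mars is the hypotenuse.
    """
    angle_at_sun = math.radians(earth_deg - mars_deg)
    return earth_sun_au / math.cos(angle_at_sun)


distance = mars_distance_au(104, 55)
print(f"Sun-Mars distance: {distance:.3f} AU")
```

Under these assumptions the result lands close to the 1.524 AU usually quoted for Mars.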

Astronomical Unit

AU or Astronomical Unit is the average distance between the Earth and the Sun, approximately 150 million kilometers. It is used mainly to express distances within our solar system. The closest planet to the Sun, Mercury, is about 0.4 AU away from it, while Pluto is about 40 AU away from the Sun.

To give more perspective, the Earth’s distance from the Sun changes over the year because the Earth does not orbit the Sun in a perfect circle. In January, the Earth is at perihelion, its closest point to the Sun, at 0.983 AU. In July, it is at aphelion, its furthest point from the Sun, at 1.017 AU.

| Planet Name | Distance from the Sun (AU) |
|---|---|
| Mercury | 0.387 |
| Venus | 0.723 |
| Earth | 1 |
| Mars | 1.524 |
| Jupiter | 5.203 |
| Saturn | 9.582 |
| Uranus | 19.201 |
| Neptune | 30.047 |
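To get a feel for these numbers, the short Python sketch below converts the table’s AU values into kilometers and into the time sunlight takes to arrive. The constants are the standard defined values of the AU and the speed of light; the helper names are illustrative only.

```python
AU_KM = 149_597_870.7   # one astronomical unit in kilometers (defined value)
C_KM_S = 299_792.458    # speed of light in kilometers per second

# Average distances from the Sun in AU, copied from the table above.
PLANETS_AU = {
    "Mercury": 0.387, "Venus": 0.723, "Earth": 1.0, "Mars": 1.524,
    "Jupiter": 5.203, "Saturn": 9.582, "Uranus": 19.201, "Neptune": 30.047,
}


def au_to_km(au):
    """Convert a distance in AU to kilometers."""
    return au * AU_KM


def light_minutes(au):
    """Minutes that light needs to cover a distance given in AU."""
    return au_to_km(au) / C_KM_S / 60


for name, au in PLANETS_AU.items():
    print(f"{name:8s} {au_to_km(au) / 1e6:8.1f} million km "
          f"{light_minutes(au):7.1f} light-minutes")
```

The same helpers also work for the perihelion and aphelion figures, for example `au_to_km(0.983)` and `au_to_km(1.017)`.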

Light Years and Parsecs

The large distances between stars necessitated other units of measurement, such as light years and parsecs. A light year is the distance that light travels through a vacuum in a Julian year (365.25 days). This is a distance of 9.46 × 10¹² km (approximately 10 trillion kilometers), or about 63,200 AU. A parsec, on the other hand, is a distance of 3.086 × 10¹³ km, or 3.26 light years; this is approximately 206,265 AU. The parsec is the unit preferred by astronomers.
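These conversion factors can be checked from first principles. The sketch below is only an illustration: it takes the defined speed of light, a Julian year of 365.25 days, and the definition of the parsec as the distance at which 1 AU spans an angle of one arcsecond.

```python
import math

C_KM_S = 299_792.458            # speed of light, km/s
JULIAN_YEAR_S = 365.25 * 86_400  # seconds in a Julian year
AU_KM = 149_597_870.7           # astronomical unit, km

# Distance light covers in one Julian year.
light_year_km = C_KM_S * JULIAN_YEAR_S
light_year_au = light_year_km / AU_KM

# A parsec is the distance at which 1 AU subtends one arcsecond.
one_arcsecond_rad = math.radians(1 / 3600)
parsec_au = 1 / math.tan(one_arcsecond_rad)
parsec_km = parsec_au * AU_KM
parsec_ly = parsec_km / light_year_km

print(f"1 light year = {light_year_km:.3e} km = {light_year_au:,.0f} AU")
print(f"1 parsec     = {parsec_km:.3e} km = {parsec_au:,.0f} AU "
      f"= {parsec_ly:.2f} light years")
```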

It is important to note that a light year is a measure of distance and not a measure of time as it is sometimes mistaken for.

Ground-based instruments can only measure stellar distances by parallax out to about 100 parsecs, which corresponds to a parallax of one-hundredth of an arcsecond. The Hipparcos satellite, launched in 1989, allowed astronomers to measure out to about 1,000 parsecs (a parallax of one-thousandth of an arcsecond). This allowed astronomers to measure the distances to many more stars.
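The link between parallax and distance is a simple reciprocal: a star at d parsecs shows a parallax of 1/d arcseconds. A minimal Python sketch of that relation follows; the function name is illustrative.

```python
def distance_parsecs(parallax_arcsec):
    """Distance in parsecs for a parallax angle given in arcseconds."""
    if parallax_arcsec <= 0:
        raise ValueError("parallax must be a positive angle")
    return 1.0 / parallax_arcsec


# The two measurement limits quoted above.
for parallax in (0.01, 0.001):
    print(f'{parallax}" -> {distance_parsecs(parallax):.0f} parsecs')
```

The smaller the angle an instrument can resolve, the farther it can reach, which is why a tenfold improvement in angular precision extends the range tenfold.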

To help you better understand a light year, consider the nearest star to the Earth other than the Sun, Alpha Centauri. This star is 4.3 light years, or about 40 trillion kilometers (25 trillion miles), away. With the speed of light being 299,792 kilometers per second, it takes light 4.3 years to travel from Alpha Centauri to the Earth.

Distance

Before calculations and mathematics, distance was measured using body parts, for example, the foot and the cubit. A cubit is the distance from the tip of the middle finger to the bottom of the elbow on the same arm. This method of measurement is said to have been used by ancient Egyptians, Mesopotamians, and Indians. The foot was used by ancient Greeks and Romans. These methods were not accurate since there wasn’t a standard measurement of a “foot” or a “cubit”.

Charlemagne in the 8th century tried to find a standardized measurement for distance using what he called “toise de l’Écritoire,” which was approximately 6 feet. This attempt was unfortunately not successful.

In the 17th century, there were several attempts to get a standardized unit of measurement. Tito Livio Burattini published a book in 1675 that described a unit of distance he named the “metro cattolico”. This “meter” was shorter than what we call a meter by half a centimeter.

In 1668, John Wilkins proposed that a decimal system be used to measure distance.

The French were, however, the first to make real strides in standardizing the measurement of distance. The government noted that the many different systems of measurement in use caused confusion. One of the first proposals was to base the unit of length on a pendulum.

The government then contracted scientists who formed a commission chaired by Jean-Charles de Borda. The commission included Joseph-Louis Lagrange, Pierre-Simon Laplace, and Gaspard Monge. The metre was to be one ten-millionth of the distance from the North Pole to the Equator, and to determine that distance, the arc of the meridian between two points was measured: the Belfry of Dunkirk and Montjuïc Castle in Barcelona. Though the two points were not on exactly the same longitude, only a small difference was noted. Two surveyors, Jean-Baptiste Delambre and Pierre Méchain, took six years (1792 to 1798) to measure the distance. Several platinum bars were then ordered, and the bar that was the closest to one ten-millionth of the pole-to-equator distance was chosen as the standard metre bar. It was known as the “mètre des Archives”. Later it was discovered that this bar was not exactly “one ten-millionth of the distance from the North Pole to the Equator”.

Though this unit was created, the metre was not accepted throughout France until the mid-19th century. In 1816, the Netherlands also started using the French unit of measurement. As more countries adopted this system, there was a need to find a more accurate definition of the metre. A diplomatic conference in France formed the “Bureau international des poids et mesures” (BIPM). This organization made new prototypes of 90% platinum and 10% iridium. One of the prototypes matched the required length most closely and became the official prototype metre.

Because it is costly and impractical to create prototypes of such high quality, it was later decided that the metre should be defined using something that anyone can measure at any time and anywhere. In 1960, the metre was first redefined using the wavelength of light radiated by krypton-86. In 1983, it was redefined again as the distance that light travels in a vacuum in 1/299,792,458 of a second. This is why the speed of light is exactly 299,792,458 m/s; the metre and the speed of light are tied one to one:

1 m = c × (1 / 299,792,458) s

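In 2019, the wording of the metre’s definition was made more robust, but the metre remains exactly the same distance. Just as with clocks, the definition now relies on the cesium atom, whose transition frequency Δν_Cs = 9,192,631,770 Hz defines the second, which ties distance and time together in the definition of the metre. Here is the new equation:

1 m = (9,192,631,770 / 299,792,458) × c / Δν_Cs ≈ 30.663319 × c / Δν_Cs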
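The relationship between the metre, the second, and the cesium atom can be illustrated with a few lines of Python. This is only a sketch built on the two fixed SI constants (the speed of light and the cesium hyperfine transition frequency); the variable names are illustrative.

```python
C_M_S = 299_792_458              # speed of light in m/s (exact by definition)
DELTA_NU_CS_HZ = 9_192_631_770   # cesium-133 transition frequency in Hz (exact)

# Time light needs to travel one metre in a vacuum.
time_for_one_metre_s = 1 / C_M_S

# The same interval counted in periods of the cesium radiation.
cs_periods_per_metre = DELTA_NU_CS_HZ / C_M_S

print(f"Light covers 1 m in {time_for_one_metre_s * 1e9:.4f} ns")
print(f"That is about {cs_periods_per_metre:.6f} cesium periods")
```

In other words, one metre is the distance light travels during roughly 30.66 oscillations of the cesium radiation that defines the second.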

Weights

Weight and mass are often confused in everyday speech. The weight of an object is the force of gravity on the object, whereas the mass of an object is the amount of matter in it. Our weight depends on the gravitational pull on our bodies, so our weight on Earth is different from our weight on Mars, while our mass stays the same.
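The difference is easy to see numerically, since weight is mass multiplied by the local gravitational acceleration. The sketch below uses standard gravity for Earth and an approximate surface value for Mars; both figures and the function name are assumptions added for illustration, not values from this article.

```python
G_EARTH_M_S2 = 9.80665  # standard gravity on Earth, m/s^2
G_MARS_M_S2 = 3.71      # approximate surface gravity on Mars, m/s^2


def weight_newtons(mass_kg, gravity_m_s2):
    """Weight (a force, in newtons) of a mass under a given gravity."""
    return mass_kg * gravity_m_s2


mass_kg = 70.0  # the mass is the same on both planets
on_earth = weight_newtons(mass_kg, G_EARTH_M_S2)
on_mars = weight_newtons(mass_kg, G_MARS_M_S2)

print(f"Mass: {mass_kg} kg")
print(f"Weight on Earth: {on_earth:.1f} N")
print(f"Weight on Mars:  {on_mars:.1f} N")
```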

In ancient Europe, the unit of mass was the “pound”. The French called it the livre, which was equivalent to the pound. A pound was approximately 328.9 grams. In England, there were different pounds, such as the Troy pound, the merchant’s pound, and the London pound, among others. These pounds weighed between 350 and 467 grams. France also used various livres, such as the livre esterlin, the livre poids de marc, and the livre de Paris. These were between 367.1 grams and 489.5 grams.

In 1668, John Wilkins suggested that weight could be measured against a known volume of water. The French were once again instrumental in standardizing what mass and weight were. The Commission of Weights and Measures was formed in 1793. The commission decided that the unit of mass would be the mass of “a cubic decimeter of distilled water at 0 degrees Celsius”. They named this unit the grave. Other units were also created, such as the gravet (1/1000 of a grave) and the bar (1000 graves).

Later on, in 1795, the gramme was coined to replace the gravet and the bar. A grave was 1,000 grams, which was a kilogram. A gravet was therefore a gram. A platinum prototype of the kilogram was made in 1799. This prototype would be the definition of a kilogram for the next 90 years.

A new prototype was later created in 1879 and was made of 90% platinum and 10% iridium. In 1889, it officially became the definition of the kilogram, and it remained so until 2019, when the kilogram was redefined in terms of the Planck constant.

Time

| Year | June 30 | December 31 |
|---|---|---|
| 1972 | +1 | +1 |
| 1973 | 0 | +1 |
| 1974 | 0 | +1 |
| 1975 | 0 | +1 |
| 1976 | 0 | +1 |
| 1977 | 0 | +1 |
| 1978 | 0 | +1 |
| 1979 | 0 | +1 |
| 1980 | 0 | 0 |
| 1981 | +1 | 0 |
| 1982 | +1 | 0 |
| 1983 | +1 | 0 |
| 1984 | 0 | 0 |
| 1985 | +1 | 0 |
| 1986 | 0 | 0 |
| 1987 | 0 | +1 |
| 1988 | 0 | 0 |
| 1989 | 0 | +1 |
| 1990 | 0 | +1 |
| 1991 | 0 | 0 |
| 1992 | +1 | 0 |
| 1993 | +1 | 0 |
| 1994 | +1 | 0 |
| 1995 | 0 | +1 |
| 1996 | 0 | 0 |
| 1997 | +1 | 0 |
| 1998 | 0 | +1 |
| 1999 | 0 | 0 |
| 2000 | 0 | 0 |
| 2001 | 0 | 0 |
| 2002 | 0 | 0 |
| 2003 | 0 | 0 |
| 2004 | 0 | 0 |
| 2005 | 0 | +1 |
| 2006 | 0 | 0 |
| 2007 | 0 | 0 |
| 2008 | 0 | +1 |
| 2009 | 0 | 0 |
| 2010 | 0 | 0 |
| 2011 | 0 | 0 |
| 2012 | +1 | 0 |
| 2013 | 0 | 0 |
| 2014 | 0 | 0 |
| 2015 | +1 | 0 |
| 2016 | 0 | +1 |
| 2017 | 0 | 0 |
| 2018 | 0 | 0 |
| 2019 | 0 | 0 |
| 2020 | 0 | 0 |

Leap seconds added on June 30 and December 31 of each year. Clocks started 1972 with a 10-second adjustment and then we got another +27 leap seconds throughout the years. This is the difference between our atomic clocks and real Earth time.

Early humanity used to record time with the phases of the moon. This was approximately 30,000 years ago. Amazingly, newer concepts of time began forming only 400 years ago. Ancient civilizations used to measure time using celestial bodies, namely the Sun and the Moon. With the setting and the rising of the Sun, people knew that a new day had begun.

After noting a day, they were also able to determine that it took 27 to 30 days for the phases of the Moon to recur. Early humanity divided the day into simple parts: early morning, mid-day, late afternoon, and night. However, as we continued to evolve, we needed more advanced measures of time.

Currently, we divide a day into 24 hours, an hour into 60 minutes, and a minute into 60 seconds. This division can be traced back to the Babylonians, who thought that there was a mystical power in multiples of 12. They divided the part of the day when the Sun shone into 12 and the part after the Sun set into another 12. Their mathematicians also divided circles into 360 parts and each part into 60. This influenced the decision to divide each of these 24 parts into 60, and those parts again by 60, creating minutes and seconds.

5,000 to 6,000 years ago, North Africans and people in the Middle East started developing clock-making techniques. These included fire clocks that would burn through knotted ropes, measuring the amount of time the fire took to burn from one knot to the next. Around 1,500 BC, sundials, which were a more precise way of measuring time throughout the day, started to appear, along with basic hourglasses, which were better suited to measuring short periods of time repeatedly.

The first mechanical clocks are said to have been created in the 14th century, when a device called an escapement was invented. It relied on gravity: a falling weight was slowed down by a cogwheel that advanced by one tooth per second.

Galileo Galilei noticed that a pendulum had a constant swing and rhythm. He used this observation to design a clock regulated by a pendulum. This technique was also adopted by other scientists, including the astronomer Christiaan Huygens.
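The regularity of a pendulum can be illustrated with the standard small-swing formula, T = 2π√(L/g), which is textbook physics rather than something stated in this article. The Python sketch below uses it to compute a pendulum’s period and the length of a “seconds pendulum” (one whose swing in each direction takes one second); the function names are illustrative.

```python
import math

G_M_S2 = 9.80665  # standard gravity, m/s^2


def pendulum_period_s(length_m, gravity=G_M_S2):
    """Period of a simple pendulum, valid for small swing angles."""
    return 2 * math.pi * math.sqrt(length_m / gravity)


def seconds_pendulum_length_m(gravity=G_M_S2):
    """Length whose full back-and-forth period is exactly 2 seconds."""
    return gravity / math.pi ** 2


length = seconds_pendulum_length_m()
print(f"Seconds pendulum length: {length:.4f} m")
print(f"Its period: {pendulum_period_s(length):.3f} s")
```

The length comes out just under one metre, which helps explain why a pendulum was once proposed as the basis for a unit of length.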

Isaac Newton also studied time. However, a definition only came in the 20th century with Albert Einstein’s studies on relativity. He gave time a definition: “time is the fourth dimension of a four-dimensional world consisting of space (length, height, depth) and time”.

In the 1930s, an improvement of the pendulum was made by the creation of the quartz-crystal clock.

Today we use atomic clocks. Lord Kelvin actually had the idea for such clocks in 1879, but it took until 1949 to build the first atomic clock. The first models were less accurate than the quartz clock. It took another 6 years to get a useful atomic clock.

The speed of the Earth’s rotation on its axis varies very slightly from year to year, so we have to adjust our clocks every now and then. To do so, we add or remove 1 second on June 30 or December 31. In most years, though, no seconds are added. In 1972, we added 2 seconds in the same year. So far we have never subtracted a second (i.e. the Earth is slowing down, not speeding up).

When we first started using atomic clocks, there were not many computers in the wild, so we did not have leap seconds. After some time, though, it was obvious that this was going to be a problem. In 1972, it was decided that UTC would tick at the rate of atomic time and be adjusted with leap seconds so that everyday clocks stay matched to Earth time. At that point, atomic time (TAI) had already drifted 10 seconds ahead, so TAI was UTC + 10 s at the beginning of 1972.

Since 1972, we have added another 27 seconds, so the difference between atomic time and UTC is now 37 seconds. This gap is likely to continue to grow over time. Although on average we have added a new leap second about every 21 months, the events are not regular. They have at times happened often (1972 to 1998) and at times rarely (1999 to 2020).
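The bookkeeping above can be reproduced with a short Python sketch. The year lists are copied from the leap second table in this section, the 10-second starting offset is the one quoted for the beginning of 1972, and the function names are illustrative.

```python
# Years in which a leap second was added, taken from the table above.
JUNE_30 = [1972, 1981, 1982, 1983, 1985, 1992, 1993, 1994, 1997, 2012, 2015]
DEC_31 = [1972, 1973, 1974, 1975, 1976, 1977, 1978, 1979,
          1987, 1989, 1990, 1995, 1998, 2005, 2008, 2016]

INITIAL_OFFSET_S = 10  # atomic time minus UTC at the start of 1972


def leap_seconds_through(year):
    """Leap seconds added from 1972 up to the end of the given year."""
    return sum(1 for y in JUNE_30 + DEC_31 if y <= year)


def atomic_minus_utc(year):
    """Offset in seconds between atomic time and UTC at the end of a year."""
    return INITIAL_OFFSET_S + leap_seconds_through(year)


print("Leap seconds 1972-2020:", leap_seconds_through(2020))
print("Offset at end of 1998:", atomic_minus_utc(1998), "s")
print("Offset at end of 2020:", atomic_minus_utc(2020), "s")
```

Comparing the 1998 and 2020 offsets shows the uneven pace: 22 leap seconds arrived in the first 27 years, and only 5 in the following 22.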

Conclusion

Physicists and astronomers have made tremendous contributions to the measurements used on Earth and in space. We’re constantly learning and discovering, and it is only a matter of time before we expand the range of things that we can measure.
